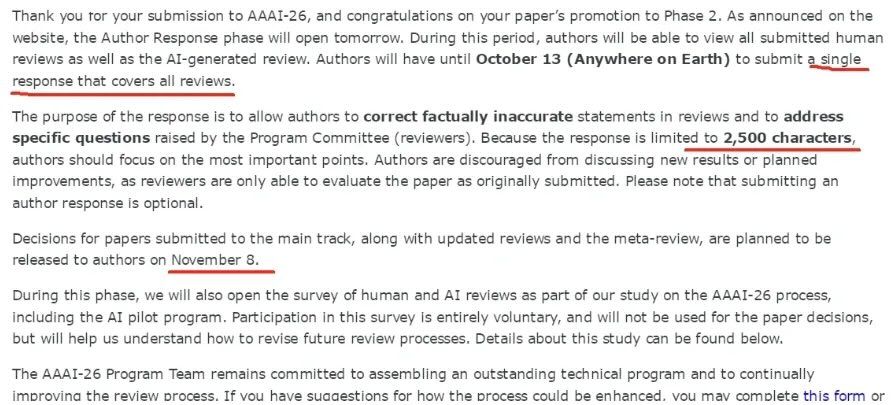

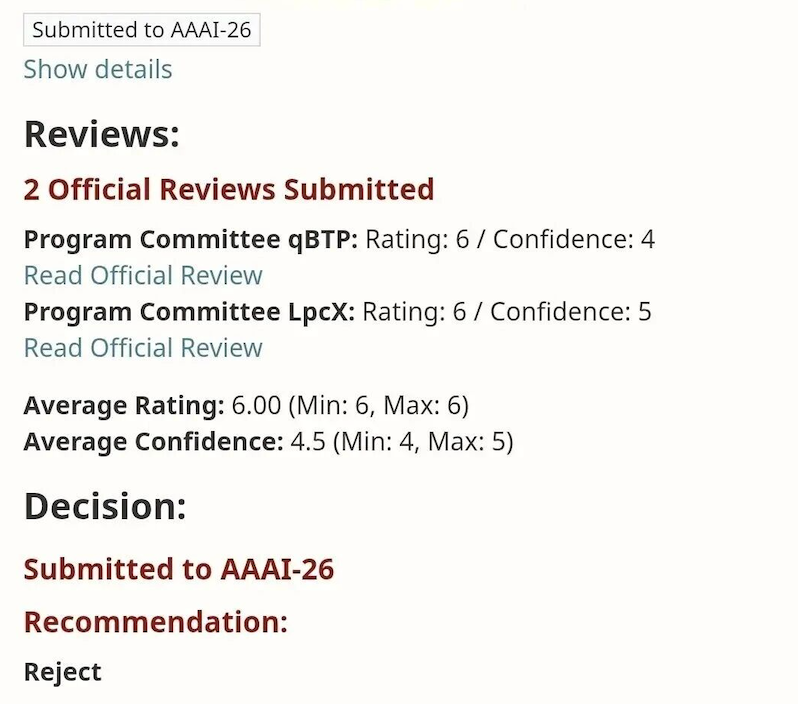

I want to add a few reflections of my own on AI review.

Potential Strengths

Scalability and Efficiency: AI systems could assist in managing the ever-growing number of submissions, reducing workload for human reviewers and accelerating review timelines.

Consistency and Standardization: Automated systems can enforce uniform criteria, potentially reducing variance caused by subjective or inconsistent human judgment.

Augmented Support for Humans: AI could provide structured summaries, highlight methodological issues, or retrieve related prior work, acting as a co-pilot rather than a replacement for human reviewers.

Transparency and Traceability: With criterion-aligned or structured outputs, AI systems might make explicit how particular aspects of a paper were evaluated, offering traceability that complements human interpretation.

Concerns and Limitations

Quality and Depth of Judgment: Peer review is not just about summarization or surface-level critique. Human reviewers often contribute domain expertise, intuition, and contextual reasoning that AI currently struggles to replicate.

Evaluation Metrics Misalignment: Using overlap-based metrics (e.g., ROUGE, BERTScore) may not fully capture the nuanced quality of reviews, which often rely on critical reasoning and qualitative assessment.
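To make the misalignment concrete, here is a minimal sketch of a unigram-overlap F1 score. It is a simplified stand-in in the spirit of ROUGE-1, not the official ROUGE implementation (no stemming, no proper tokenization). The function name `unigram_f1` and the three example sentences are made up for illustration. It shows how a review that reverses the reference's verdict can still score far higher than a paraphrase that agrees with it.

```python
from collections import Counter


def unigram_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1-style F1: clipped unigram overlap on whitespace tokens."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count of each shared token.
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


# Hypothetical review sentences, invented for this illustration.
reference = "the experiments are sound and the claims are well supported"
contradicting = "the experiments are not sound and the claims are not well supported"
paraphrase = "results hold up and evidence backs each conclusion"

score_contradicting = unigram_f1(contradicting, reference)
score_paraphrase = unigram_f1(paraphrase, reference)

# The contradicting review shares almost every word with the reference,
# so it scores much higher than the paraphrase that agrees with it.
print(f"contradicting: {score_contradicting:.2f}")
print(f"paraphrase:    {score_paraphrase:.2f}")
```

Embedding-based metrics such as BERTScore soften the paraphrase problem, but they still measure similarity to a reference rather than whether the critique is well reasoned.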

Dataset and Generalizability Issues: Many experiments in this space rely on small or narrow datasets (e.g., limited to certain conferences), which risks overfitting and reduces generalizability to other domains.

Reproducibility and Fairness: Reliance on proprietary large language models introduces cost, access, and reproducibility challenges. Comparisons across different model sizes or modalities can also create fairness concerns.

Multimodality and Context Handling: While AI can parse text and visuals, questions remain about whether figures, tables, and extended contexts truly require specialized handling beyond what modern large-context models can already process.

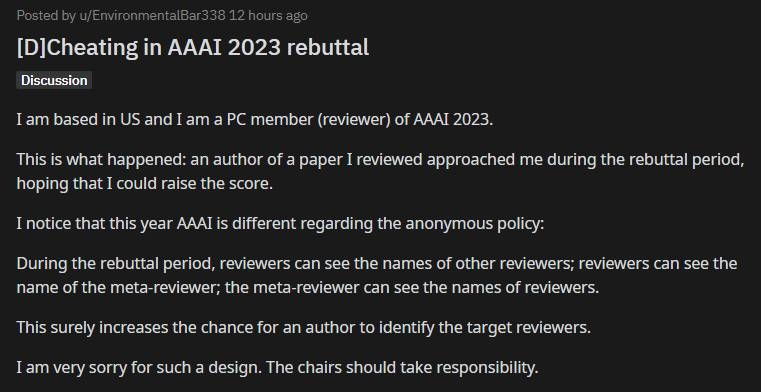

Ethical and Practical Considerations

Human Replacement vs. Human Augmentation: A key concern is whether AI should replace reviewers or assist them. Many argue for augmentation rather than substitution, especially given the subjective and community-driven nature of peer review.

Bias and Trust: AI-generated reviews may inherit biases from training data or evaluation frameworks, raising questions about fairness and transparency in decision-making.

Cost and Sustainability: Running AI review systems at scale may incur significant computational and financial costs, particularly when leveraging closed, high-capacity models.

Accountability: Unlike human reviewers, AI systems cannot be held accountable for their judgments, which complicates trust and governance in academic publishing.

Emerging Attitudes

Skepticism: Many scholars remain unconvinced that AI can capture the essence of peer review, viewing it as reductionist or superficial.

Cautious Optimism: Some see AI as a promising assistant to support human reviewers, especially for summarization, consistency checks, or initial screening.

Call for Rigor: There is a consensus that human evaluation, broader benchmarking, and careful methodological design are critical before integrating AI into the peer review process at scale.

In summary: The use of AI in peer review is seen as an intriguing and potentially useful tool for augmentation, but concerns around motivation, evaluation validity, fairness, and the irreplaceable role of human judgment dominate current attitudes. There is strong agreement that more rigorous evidence and careful deployment strategies are needed before AI can play a central role in scholarly reviewing.
