Transparent Peer Review in Higher Education: Lessons for Computer Science


Recently, the European Journal of Higher Education (EJHE) became the first journal in its field to adopt a Transparent Peer Review (TPR) policy, beginning in April 2023. The policy publishes anonymous peer review reports alongside accepted articles, allowing readers to see the feedback and revisions that shaped the final paper. After 18 months, the journal conducted surveys of authors and reviewers, and the results provide valuable insights that resonate strongly with the ongoing debates in computer science peer review.

This article summarizes the key findings of the EJHE case study and discusses their implications for computer science researchers.

Key Findings from EJHE’s Transparent Peer Review

Awareness and Experience

- 62% of authors and 41% of reviewers had prior experience with transparent peer review.

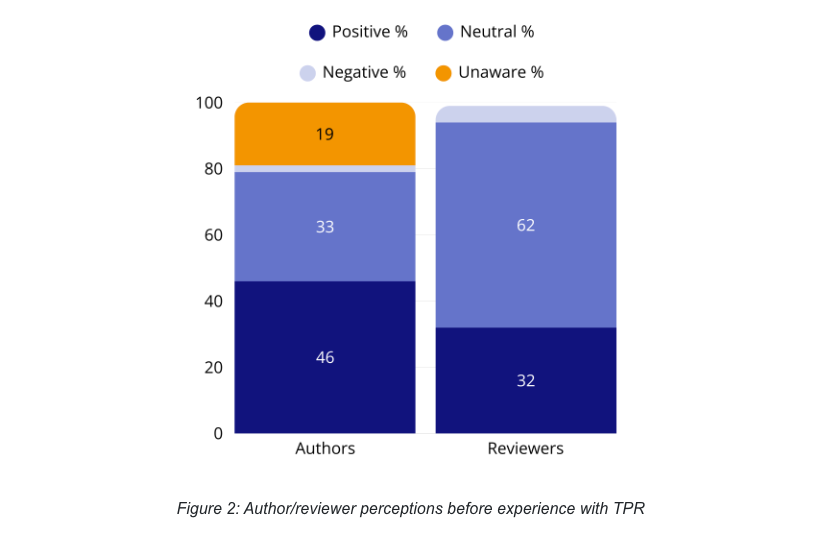

- Before engaging with EJHE’s policy, 46% of authors and 32% of reviewers had a positive perception of TPR.

Positive perception before TPR experience

Author Satisfaction

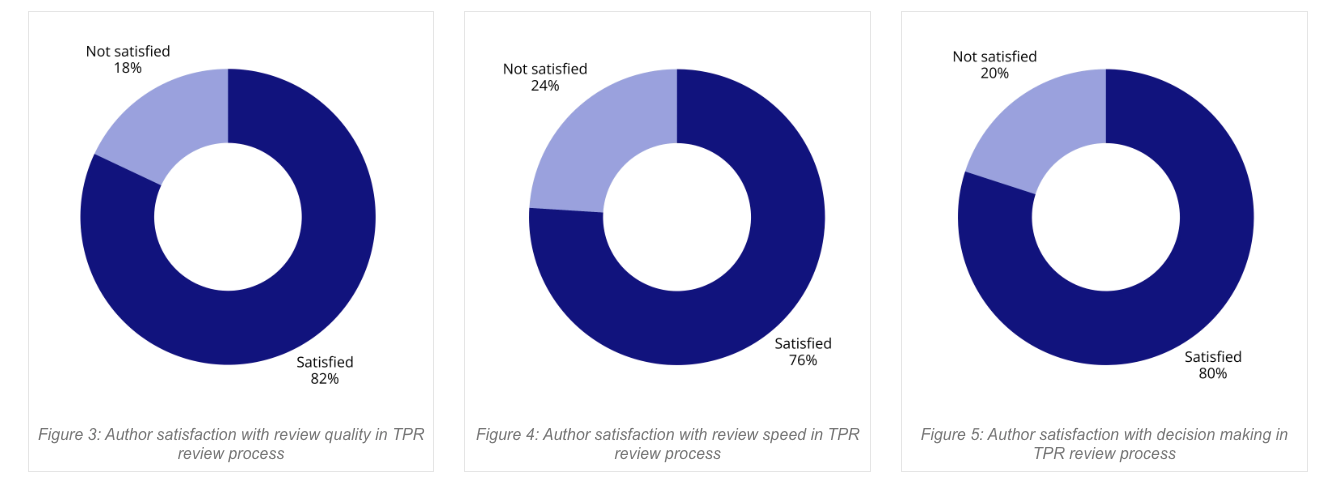

After engaging with TPR at EJHE:

- 82% of authors were satisfied with review quality.

- 76% were satisfied with review speed.

- 80% were satisfied with decision making.

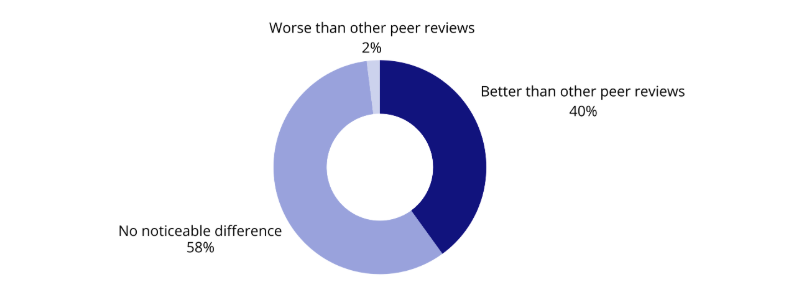

When comparing TPR to previous review processes:

- 40% said TPR was better,

- 58% noticed no difference,

- Only 2% thought it was worse.

Reviewer Perspectives

- 76% of reviewers preferred not to have their names published alongside their reports.

- 86% said transparency had no impact on how they wrote their reviews.

- Only 24% indicated that TPR would influence their future decision to review.

Please see charts here: https://insights.taylorandfrancis.com/research-impact/a-case-study-on-implementation-and-impact-of-transparent-peer-review-tpr/

Future Intentions

- 73% of authors were very likely to submit again.

- 94% said the TPR policy had no negative influence on their decision to submit.

- 40% of authors indicated TPR had a positive influence on their willingness to review.


Qualitative Feedback

Authors praised TPR for:

- High-quality, actionable reviewer comments.

- Professional, constructive review tone.

- Improved quality of final papers.

Usage data also suggest that readers value this material: peer review reports averaged 141 views and 63 downloads per article, indicating substantial community interest in reviewer feedback.

Implications for Computer Science

1. Increasing Trust in the Review Process

Computer science has faced repeated concerns about review quality, transparency, and fairness, particularly at large conferences such as NeurIPS, ICML, and CVPR. TPR offers a concrete mechanism to:

- Increase accountability by showing how papers were evaluated.

- Provide a learning resource for early-career researchers, who can study real reviews.

2. Preserving Anonymity While Sharing Insight

The EJHE experience highlights a key tension: reviewers overwhelmingly want to remain anonymous (76% preferred not to be named). This matches concerns, common in competitive CS subfields, about retaliation and reputational risk.

For CS venues, adopting TPR without revealing identities seems both feasible and widely acceptable.
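To make the anonymous-publication model concrete, the following Python is a minimal, hypothetical sketch of how a venue might turn internal referee reports into public ones. It releases reports only for accepted papers, removes reviewer identity fields entirely, and assigns stable "Reviewer N" labels so readers can follow each reviewer across revision rounds. The `ReviewReport` and `PublishedReport` types, the `publish_reports` function, the use of email as the identity key, and the labelling scheme are all illustrative assumptions, not EJHE's or any venue's actual system.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewReport:
    """A referee report as held internally by a hypothetical editorial system."""
    article_id: str
    review_round: int
    reviewer_name: str
    reviewer_email: str  # assumption: email serves as the internal reviewer ID
    text: str


@dataclass(frozen=True)
class PublishedReport:
    """Public view of a report: it has no reviewer name or email fields at all."""
    article_id: str
    review_round: int
    reviewer_label: str  # e.g. "Reviewer 1", stable across rounds of one article
    text: str


def publish_reports(reports: list[ReviewReport], accepted: set[str]) -> list[PublishedReport]:
    """Build anonymized reports for accepted articles; other reports stay private."""
    labels: dict[tuple[str, str], str] = {}  # (article_id, reviewer_email) -> label
    published: list[PublishedReport] = []
    for r in sorted(reports, key=lambda rep: (rep.article_id, rep.review_round)):
        if r.article_id not in accepted:
            continue  # reports on rejected papers are never released
        key = (r.article_id, r.reviewer_email)
        if key not in labels:
            # Number reviewers per article in order of first appearance.
            count = sum(1 for article, _ in labels if article == r.article_id)
            labels[key] = f"Reviewer {count + 1}"
        # Naive redaction: replace the reviewer's own name if it appears in the text.
        text = r.text.replace(r.reviewer_name, "[redacted]")
        published.append(PublishedReport(r.article_id, r.review_round, labels[key], text))
    return published


if __name__ == "__main__":
    reports = [
        ReviewReport("A1", 1, "Dr. X", "x@example.org", "Clarify the sampling method. Signed, Dr. X"),
        ReviewReport("A1", 1, "Dr. Y", "y@example.org", "Well argued."),
        ReviewReport("A1", 2, "Dr. X", "x@example.org", "The revision addresses my points."),
        ReviewReport("B2", 1, "Dr. Z", "z@example.org", "Not suitable for this venue."),
    ]
    for p in publish_reports(reports, accepted={"A1"}):
        print(p.reviewer_label, f"(round {p.review_round}):", p.text)
```

Run as a script, this prints three reports for article A1 (Reviewer 1, Reviewer 2, then Reviewer 1 again in round 2), with the signature in the first report replaced by `[redacted]`; article B2's report is withheld. Exact-string name redaction is deliberately simplistic, and a real workflow would still need an editorial check for indirect identifying details such as self-citations.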

3. Reviewer Workload and Incentives

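A common worry is that transparency could increase reviewer burden. At EJHE, however, 86% of reviewers said transparency had no impact on how they wrote their reviews, and only 24% said it would influence their future decisions to review. This suggests that TPR can be introduced without substantially changing how reviewers work or deterring them from reviewing.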

Further, public review reports could be integrated into reviewer recognition systems (e.g., ORCID, Publons), providing credit without breaching anonymity.

4. Impact on Submissions

In computer science, authors often worry about bias or inconsistency in reviews. At EJHE, 40% of authors found TPR better than the review processes they had previously experienced, and 94% said the policy had no negative influence on their decision to submit. This suggests that TPR is unlikely to deter submissions and could help top CS venues retain authors by signaling rigor and fairness.

Potential Takeaways for CS Peer Review Reform

The EJHE case study offers encouraging evidence that:

- TPR can improve transparency and trust without discouraging submissions or reviews.

- Reviewer anonymity remains crucial.

- Usage metrics indicate that the wider research community reads published reviews.

For computer science, where conference review crises (e.g., overloaded reviewer pools, inconsistent feedback) are frequent topics of debate, Transparent Peer Review could be a pragmatic reform.

It balances openness with practicality and may address long-standing calls for greater accountability in our field.

Final Thoughts

The EJHE study demonstrates that Transparent Peer Review is not just an idealistic vision but a workable model that benefits authors, reviewers, and readers alike.

As computer science grapples with scaling peer review for massive conferences and journals, TPR offers a way to:

- Build trust in the process,

- Encourage constructive, professional reviewing, and

- Provide the community with valuable insights into the scholarly dialogue behind published work.

Perhaps it’s time for CS conferences and journals to experiment with TPR pilots, starting small but with the potential for transformative impact.