“The Seven-Word Peer Review”: How a Joke Paper Became a Stress Test for CS Publishing


TL;DR. In 2014, a so-called “international journal” accepted a ten-page manuscript whose entire content (text, figure captions, and even a scatter plot label) repeated seven words: “Get me off your fucking mailing list.” The submitter declined to pay the $150 article processing charge (APC), so it never appeared online, yet the episode has aged into a compact case study in predatory publishing, reviewer automation, and the brittle parts of our peer-review culture. I re-read the paper, looked at the provenance, skimmed how folks on Hacker News processed it, and ran it through a modern desk-reject workflow (cspaper.org). Verdict: even the most bare-bones LLM-assisted triage would have outperformed the “review” that led to its acceptance, by a mile.

1) The stunt, in one paragraph

Back in 2005, David Mazières and Eddie Kohler composed a “paper” as a reply to spammy conference solicitations: ten pages in which the title, abstract, sections, and references are all repetitions of the exact same sentence. There’s even a flowchart on page 3 and a plot on page 10 whose only labels are, again, those seven words: weaponized minimalism at its funniest. In 2014, after receiving predatory solicitations, Australian computer scientist Peter Vamplew submitted the PDF to the International Journal of Advanced Computer Technology (IJACT). The journal came back with a glowing review and an acceptance, pending a $150 fee. Vamplew didn’t pay, so it wasn’t published. The paper remains a perfect negative-control sample for any review process.

2) What this reveals about predatory publishing (and why CS got caught in the blast radius)

- Asymmetric effort: Predatory venues invert the cost structure. Authors invest negligible effort (copy/paste seven words); the venue still “accepts,” betting on APC revenue.

- Reviewer theater: The “excellent” rating shows how some venues simulate peer review (checklists, auto-responses) without any reading.

- Brand camouflage: Grandiose titles (“International… Advanced… Technology”) plus generic scopes attract out-of-domain submissions and inflate perceived legitimacy.

- Spam-to-submission funnel: Mass email blasts are their growth engine; the stunt targeted that vector precisely—and exposed it.

- Archival pollution risk: Had the fee been paid, the paper would have landed in the low-quality indexes that many readers mistake for the scientific record.

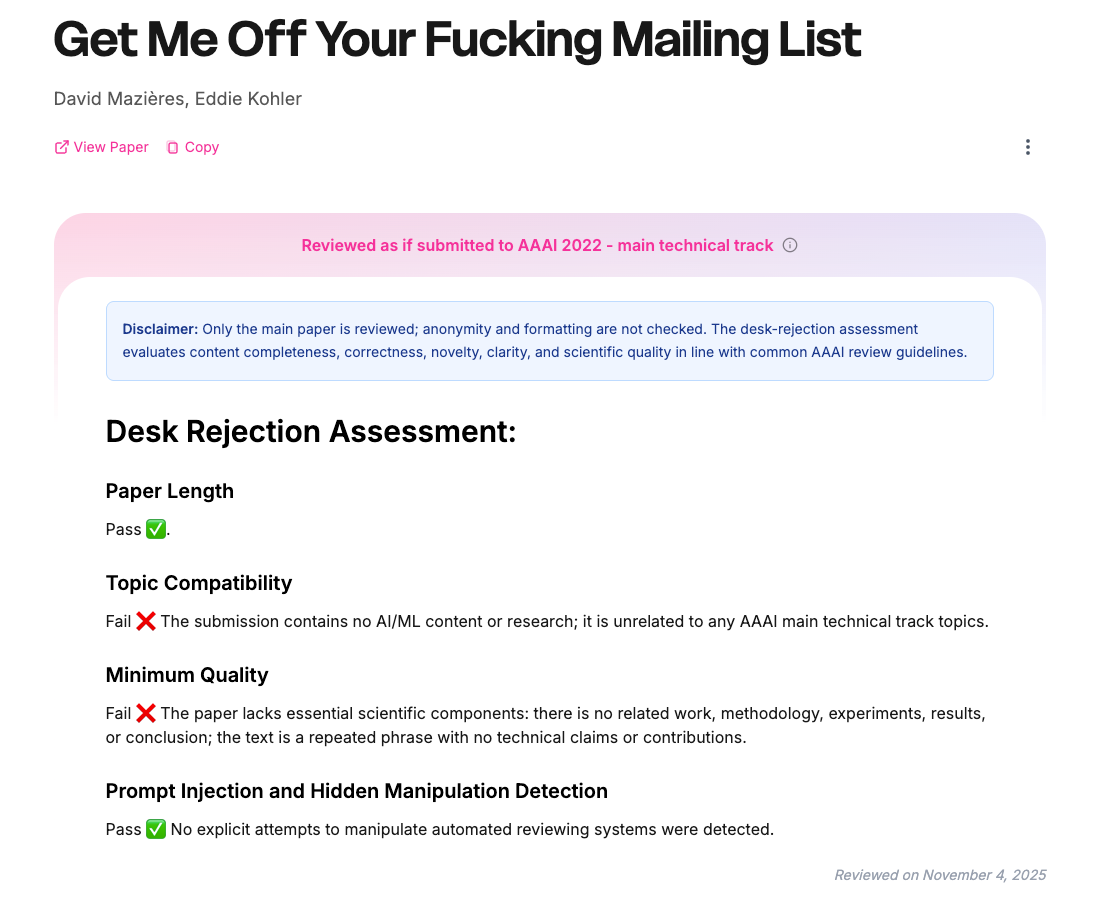

3) How a modern desk-reject should defeat a seven-word paper

I ran the same PDF through a desk-rejection rubric (as used by cspaper.org); the attached screenshot shows the outcome.

Even a lightweight LLM-assisted triage could flag:

- Lexical degeneracy: ≥95% repeated n-grams across the entire body.

- Structural vacuum: Missing standard rhetorical slots (problem, related work, method, experiments).

- Citation incoherence: References are RFCs that don’t support any claim.

- Figure semantics: Captions/axes contain no domain entities; the plot is visually present yet semantically null.

If a “journal” can’t clear that bar, something is profoundly wrong with its editorial gatekeeping.
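
To make the lexical-degeneracy check concrete, here is a minimal Python sketch of one way to measure repeated n-grams. It is an illustration under stated assumptions, not the cspaper.org implementation: the function names, the word-level trigram choice, and the tokenizer are all hypothetical, and the 0.95 threshold simply mirrors the "≥95%" figure in the list above.

```python
import re
from collections import Counter


def repeated_ngram_fraction(text: str, n: int = 3) -> float:
    """Fraction of word n-gram occurrences whose n-gram appears more than once."""
    # Crude tokenizer (an assumption): lowercase alphanumeric runs and apostrophes.
    words = re.findall(r"[a-z0-9']+", text.lower())
    ngrams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    if not ngrams:
        return 0.0
    counts = Counter(ngrams)
    # Count every occurrence that belongs to an n-gram seen at least twice.
    repeated = sum(c for c in counts.values() if c > 1)
    return repeated / len(ngrams)


def is_lexically_degenerate(text: str, n: int = 3, threshold: float = 0.95) -> bool:
    """Flag text whose repeated n-gram fraction meets the (hypothetical) threshold."""
    return repeated_ngram_fraction(text, n) >= threshold


if __name__ == "__main__":
    # Stand-in for the joke paper's body: one short sentence repeated many times.
    joke_body = "take me off your mailing list " * 50
    ordinary = "We propose a sorting method and evaluate it on three public datasets."
    print(is_lexically_degenerate(joke_body))  # flagged
    print(is_lexically_degenerate(ordinary))   # not flagged
```

A check like this only raises a flag. Real papers repeat boilerplate too (headers, notation, citations), so any threshold would need calibration on genuine submissions, and the final call stays with an editor.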

4) Why this episode won’t die (and why it still matters for CS)

- It’s a perfect meme with receipts. Anyone can open the PDF, skim ten seconds, and feel the absurdity.

- Email is the root cause. The HN discussion immediately veered into aliasing/relay defenses (“Hide My Email,” Firefox Relay, SimpleLogin, Postfix +addressing). That’s instructive: the paper is about spam as much as peer review.

- CS is the canary. Our field’s velocity and conference-first culture create pressure to publish fast, which is exactly the terrain where predators thrive.

- Automation cuts both ways. Predatory venues automated acceptance; serious venues can (and should) automate rejection of nonsense while keeping humans for judgement calls.

5) Practical takeaways for authors, reviewers, and PC chairs

For authors (especially students):

- Venue due diligence: Check editorial board credibility, indexing, APC policies, and transparency of the review process.

- COPE & ISSN sanity checks: A COPE logo is not membership; verify the ISSN actually exists in the ISSN portal.

- Watch the spam funnel: Unsolicited invites + fast acceptance + low APC = red flags.
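
As a first pass on the ISSN sanity check, the number's built-in check digit can be verified locally before looking it up. The sketch below uses the standard ISSN scheme (weights 8 down to 2 over the first seven digits, modulo 11, with "X" standing for 10); the function names are my own. A passing checksum only means the number is well-formed, not that it is registered to the journal claiming it, so the ISSN portal lookup is still needed.

```python
import re


def issn_check_digit(first_seven: str) -> str:
    """Compute the ISSN check character for the first seven digits."""
    # Weights run 8, 7, ..., 2 across the seven digits.
    total = sum(int(d) * w for d, w in zip(first_seven, range(8, 1, -1)))
    check = (11 - total % 11) % 11
    return "X" if check == 10 else str(check)


def is_well_formed_issn(issn: str) -> bool:
    """Return True if the string looks like an ISSN with a valid check digit."""
    m = re.fullmatch(r"([0-9]{4})-?([0-9]{3})([0-9Xx])", issn.strip())
    if not m:
        return False
    return issn_check_digit(m.group(1) + m.group(2)) == m.group(3).upper()


if __name__ == "__main__":
    for candidate in ("0378-5955", "2434-561X", "0378-5954", "1234"):
        print(candidate, is_well_formed_issn(candidate))
```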

For reviewers:

- Refuse review for suspicious venues. Your time legitimizes their theater.

- Encourage institutional training: Teach how to spot predatory features in grad seminars/onboarding.

For program chairs/editors:

- Automate triage, not judgement:

  - Degeneracy/boilerplate detectors and structure-aware checks.

  - Bib sanity (out-of-scope references, self-citation farms).

  - Figure/caption semantic mismatch checks.

- Show your work: Publish desk-reject reasons (anonymized) to build trust and calibrate the community.

6) A 60-second “stupidity firewall” (a suggested checklist)

- Scope match? One sentence explains relevance to the call.

- Core slots present? Problem, method, evidence, limitations.

- Text originality? No high-degeneracy copy, no nonsense word salad.

- Figure semantics? Captions and axes reference real entities/units.

- Reference fit? Citations actually support claims, not just exist.

- Author intent? No spammy metadata, no mass-submission artifacts.

This kind of rubric is where LLMs shine as assistants—labeling obvious failures and freeing humans to read the borderline cases carefully.
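
One way such a checklist could be wired together is sketched below. Everything here is an assumption for illustration: the `CheckResult` type, the keyword cues for the core slots, the 0.05 distinct-word threshold, and the two outcome labels are hypothetical, and only two of the six checklist items are implemented. The design choice it shows is that failures route a submission to an editor with reasons attached, rather than rejecting it automatically.

```python
import re
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    passed: bool
    note: str = ""


# Hypothetical keyword cues for the "core slots" item of the checklist.
CORE_SLOTS = {
    "problem": ("problem", "motivation", "introduction"),
    "method": ("method", "approach", "design"),
    "evidence": ("experiment", "evaluation", "results"),
    "limitations": ("limitation", "threats to validity", "future work"),
}


def check_core_slots(text: str) -> CheckResult:
    """Pass only if every slot has at least one cue somewhere in the text."""
    lowered = text.lower()
    missing = [slot for slot, cues in CORE_SLOTS.items()
               if not any(cue in lowered for cue in cues)]
    note = ("missing: " + ", ".join(missing)) if missing else ""
    return CheckResult("core slots", not missing, note)


def check_distinct_words(text: str, min_ratio: float = 0.05) -> CheckResult:
    """Pass only if distinct words make up at least min_ratio of all words."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    ratio = len(set(words)) / len(words) if words else 0.0
    return CheckResult("text originality", ratio >= min_ratio,
                       f"distinct-word ratio {ratio:.2f}")


def triage(text: str) -> tuple[str, list[CheckResult]]:
    """Run the checks; any failure flags the paper for a human editor."""
    results = [check_core_slots(text), check_distinct_words(text)]
    decision = ("send-to-review" if all(r.passed for r in results)
                else "flag-for-editor")
    return decision, results


if __name__ == "__main__":
    decision, results = triage("take me off your mailing list " * 50)
    print(decision)
    for r in results:
        print(f"  {r.name}: {'ok' if r.passed else 'FAIL'} ({r.note})")
```

Returning the individual results alongside the decision also makes it straightforward to publish anonymized desk-reject reasons.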

7) Why this still makes me laugh (and wince)

The paper is satire that doubles as a unit test for editorial integrity. Any venue that “passes” it has failed the most basic invariant of peer review: someone must read the paper. On the bright side, our tools and norms are better today. The cspaper desk-reject outcome shows that even a simple, transparent rubric—augmented by LLM checks—can protect serious tracks from time-wasters and protect authors from predatory traps.