AAAI 2026 First-Round Results: High Scores Rejected, AI Reviews Useful?

The highly anticipated first-round review results for AAAI 2026 are now out, sparking heated discussion across the research community. Social media timelines are filled with frustration, disbelief, and endless question marks.

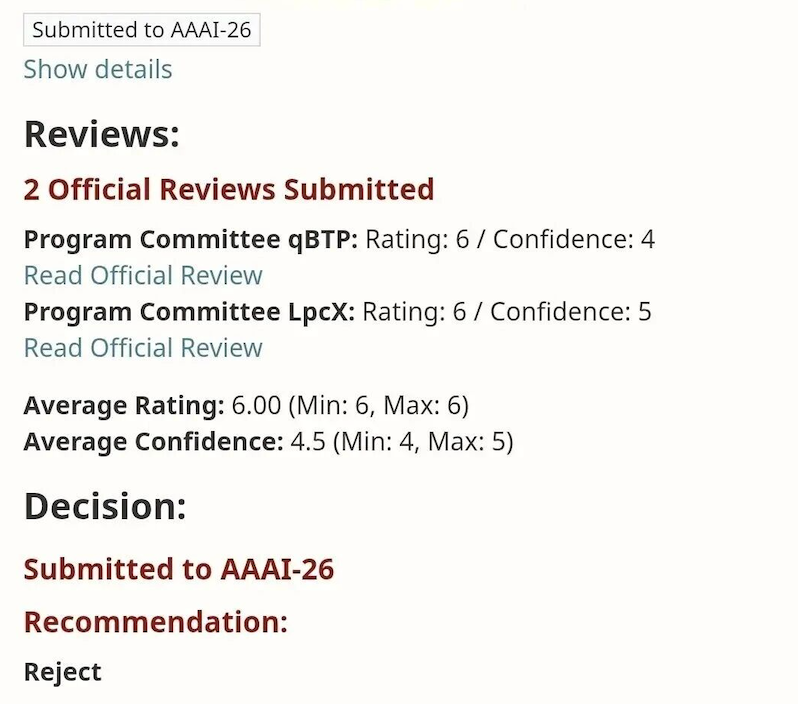

Researchers reported cases where papers with average scores of 5.5–6.5 were rejected, and even submissions scoring as high as 6.7 did not make the cut. Alarmingly, some papers in the Computer Vision (CV) track with an average of 7 were also rejected, fueling concerns about “score inflation.”

Odd Review Comments and Rejections

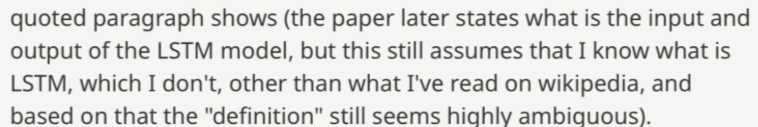

Every top-tier conference has its share of unusual or controversial reviews. This year’s AAAI did not disappoint. One reviewer famously asked:

“Who is LSTM?”

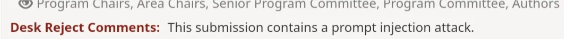

In addition, some authors embedded prompt injection attacks in their papers in an attempt to manipulate the reviews. These papers were desk-rejected outright.

A Hybrid Review Process: 3 Humans + 1 AI

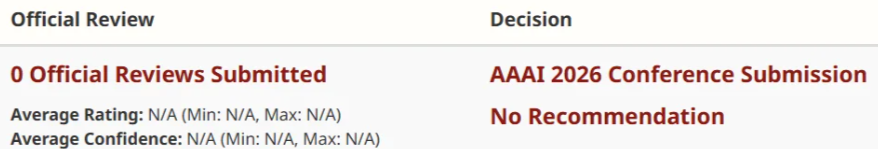

For 2026, AAAI adopted a hybrid review system: three human reviewers plus one AI-generated review. In practice, however, the number of reviews authors received varied: some papers got only 2 reviews (likely due to reviewer shortages), while others received as many as 5 (when reviews ran late, emergency reviewers were assigned, and the original reviewers then submitted as well).

Despite initial skepticism, many authors admitted that the AI-generated reviews were unexpectedly helpful and worked well as a checklist, albeit with intimidatingly long reference lists.

See this post for more details: https://cspaper.org:443/post/376

Score Variance and SPC Overrides

This cycle saw large variance in scores. Surprisingly, some papers with an average score of 4 still passed the first round, likely because SPCs (senior program committee members) read the papers carefully and judged a more favorable evaluation appropriate.

Authors whose papers passed cannot yet view the detailed scores; only reviewers can see them. Email notices about second-round progression typically arrive a day or two later. In the meantime, if you see the following, you have most likely PASSED!

Adjusting Expectations: Lower Acceptance Rates Ahead

Given this year’s record-breaking submission volume, authors are advised to lower their acceptance expectations. Yes, there are realistic scenarios in which papers with all-positive reviews are still rejected in the first or even the second phase.

The pragmatic strategy: when rejected, resubmit quickly to suitable venues.

Alternative Venues for Resubmission

- ICLR 2026: The abstract deadline is September 19, 23:59 AoE. Last year’s acceptance rate reached a historic high of 32.08%. “Ticketing” is required, and the count had already exceeded 5,000 before NeurIPS notifications, so expect a post-NeurIPS spike. LLM policy: ICLR allows LLM assistance with disclosure, and authors remain responsible for the content.

- CVPR 2026: Based on last year’s cycle, registration is likely to fall around Nov 8, so there is still time to polish.

- WWW 2026: The Sept 30 (abstract) and Oct 7 (full paper) deadlines are tight but feasible.

Community-Reported Phase-1 Outcomes (with Scores)

The table below aggregates user-reported cases with scores (from both authors and reviewers) collected from public discussions. It complements the narrative above and helps calibrate expectations across areas. User identities are omitted for privacy.

Note: Two official AAAI notes this year also emphasized integrity screening (plagiarism/similarity and potential reviewer–author collusion) and AI-assisted review quality flags; these may help explain some unexpected outcomes, even at high scores.

| # | Scores (per paper) | Phase-1 Decision | Area/Track | One-line takeaway |
|---|---|---|---|---|
| C01 | 7/6/5 | Reject | Unspecified | High score rejected; one review alleged to be a “malicious low.” |
| C02 | 6 | Reject | Unspecified | From a batch of four (6/5/5/3), all four rejected after scrutiny. |
| C03 | 5 | Reject | Unspecified | Ditto (batch case). |
| C04 | 5 | Reject | Unspecified | Ditto (batch case). |
| C05 | 3 | Reject | Unspecified | Ditto (batch case). |
| C06 | 7/4/7 | Reject | Unspecified | First paper by a junior author; couldn’t clear the bar. |
| C07 | 7.5 (avg) | Pass | Unspecified | Clean pass; author starting implementation for phase 2. |
| C08 | 7/8/3 | Pass | Unspecified | Insight praised; one harsh “3” overruled by stronger reviews. |
| C09 | 3 (borderline) | Pass | Unspecified | Messy write-up and thin experiments, but still advanced. |
| C10 | 5/6/7 | Reject | Unspecified | Rejected; complaint that the appendix wasn’t read. |
| C11 | 7/6/5 | Reject | Unspecified | Highest-scoring paper in a reviewer’s batch still failed round 1. |
| C12 | 8/6/6 | Reject | CV-leaning | Perceived hostility to certain subareas this year. |
| C13 | 7/6/6 | Reject | Unspecified | Reviewer baffled as to why this was sent for review if pre-decided. |
| C14 | 2/2/3 | Reject | Unspecified | Formatting issues and suspected AI-generated text; no figures or code. |
| C15 | 6 | Pass | Unspecified | Reviewer gave 6; both their own paper and the one they reviewed passed. |
| C16 | 5/4/6 | Pass | Continual Learning | Reported by a reviewer. |
| C17 | 6/6/5 | Pass | Watermarking | Reported by a reviewer. |
| C18 | 4 | Pass | Graph Learning | Reported by a reviewer. |
| C19 | 3/5/4 | Reject | Peer-Review Generation | Reported by a reviewer. |
| C20 | 3/4 | Reject | Graph Learning | Reported by a reviewer. |
| C21 | 6/5/6/5 | Reject | Unspecified | Another batch observation: “mean” scoring trends across reviewers. |
| C22 | 7/7/4/3 | Mixed → 2 Pass | Unspecified | Same reviewer’s batch: the “7/8/3” paper and a “3 (BL)” paper advanced. |

Interpretation tips:

- High-score rejections (e.g., 7/6/5; 8/6/6; 7/6/6) were not rare this round.

- Borderline passes (e.g., “3 (BL) → pass”) suggest SPC/meta-level overrides or differing thresholds by area.

- Integrity checks and AI-assisted quality flags this year may have amplified both desk rejects (e.g., injection attempts) and unexpected outcomes.

Finally ...

AAAI 2026 has demonstrated a trend of score inflation and unpredictability. Even excellent papers faced harsh rejections, while the hybrid review process produced both frustration and surprising insights.

But this is not the end, just part of the research journey. For those disappointed by AAAI’s first-round results, opportunities abound at ICLR, CVPR, WWW, and beyond. Adjust expectations, refine the work, and move forward.

As one researcher noted: “No matter what happens at AAAI, this is not the final destination. Summarize your lessons, stay motivated, and we’ll meet at the next top-tier venue.”

Wishing everyone an abundance of accepts ahead!
